
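Use cases: what is the GC process? GC is short for Garbage Collection.

In Ceph, objects are deleted all the time, and deletion is not synchronous but asynchronous: RGW deletes objects asynchronously through its periodic GC mechanism.

In the normal deletion case, deleting an object does not free its space immediately. Instead, information about the object to be deleted is added to the GC queue, and a GC thread (one per RGW instance) periodically frees the disk space. The amount of work done in each pass is also bounded.

Let's see it in action first: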

```
[root@node86 out]# s3cmd put mgr.x.log s3://hrp
'mgr.x.log' -> 's3://hrp/mgr.x.log'  [1 of 1]
 65016842 of 65016842   100% in    1s    39.40 MB/s  done
```

```
[root@node86 build]# rados listomapvals -p expondata.rgw.log -N gc gc.9 | more
key (48 bytes):
00000000  30 5f 62 34 63 34 66 38  63 31 2d 33 62 35 64 2d  |0_b4c4f8c1-3b5d-|
00000010  34 63 63 37 2d 61 63 36  61 2d 61 30 30 30 37 31  |4cc7-ac6a-a00071|
00000020  66 63 30 32 62 65 2e 38  34 31 31 31 2e 34 37 00  |fc02be.84111.47.|
00000030

value (4086 bytes) :
00000000  01 01 f0 0f 00 00 2e 00  00 00 62 34 63 34 66 38  |..........b4c4f8|
00000010  63 31 2d 33 62 35 64 2d  34 63 63 37 2d 61 63 36  |c1-3b5d-4cc7-ac6|
00000020  61 2d 61 30 30 30 37 31  66 63 30 32 62 65 2e 38  |a-a00071fc02be.8|
00000030  34 31 31 31 2e 34 37 00  01 01 b0 0f 00 00 10 00  |4111.47.........|
...
000000e0  2d 33 62 35 64 2d 34 63  63 37 2d 61 63 36 61 2d  |-3b5d-4cc7-ac6a-|
000000f0  61 30 30 30 37 31 66 63  30 32 62 65 2e 31 34 31  |a00071fc02be.141|
00000100  31 33 2e 31 5f 5f 73 68  61 64 6f 77 5f 6d 67 72  |13.1__shadow_mgr|
00000110  2e 78 2e 6c 6f 67 2e 38  32 46 32 35 2d 43 6b 6e  |.x.log.82F25-Ckn|
00000120  78 55 50 67 56 30 49 39  4a 4d 66 4c 71 77 30 35  |xUPgV0I9JMfLqw05|
00000130  34 6e 53 7a 38 75 5f 30  00 00 00 00 02 01 f4 00  |4nSz8u_0........|
00000140  00 00 1a 00 00 00 65 78  70 6f 6e 64 61 74 61 2e  |......expondata.|
00000150  72 67 77 2e 62 75 63 6b  65 74 73 2e 64 61 74 61  |rgw.buckets.data|
...
```

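You can also inspect the GC queue with `radosgw-admin gc list --include-all`. Without `--include-all`, only entries that have already expired (that is, entries that are due for processing) are listed. The `time` field is the entry's expiration time: the moment it was queued plus `rgw_gc_obj_min_wait` (2 hours by default).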

```
[root@node86 build]# radosgw-admin gc list --include-all
[
    {
        "tag": "b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.84111.47\u0000",
        "time": "2024-03-03 03:19:15.0.059423",
        "objs": [
            {
                "pool": "expondata.rgw.buckets.data",
                "oid": "b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_0",
                "key": "",
                "instance": ""
            },
            ...
            {
                "pool": "expondata.rgw.buckets.data",
                "oid": "b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_15",
                "key": "",
                "instance": ""
            }
        ]
    }
]
```
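The deferred deletion shown above can be modeled with a small sketch. It assumes that each queue entry carries an expiration time (enqueue time plus a minimum wait, which RGW configures through `rgw_gc_obj_min_wait`) and that `gc list` without `--include-all` returns only entries whose time has passed. `GcEntry` and `GcQueue` are hypothetical names, not Ceph types, and times are plain seconds.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified model of one GC queue entry (not Ceph's real type).
struct GcEntry {
  std::string tag;                // request id that produced the garbage
  std::int64_t expire_at;         // earliest time (seconds) the entry may be processed
  std::vector<std::string> oids;  // tail (__shadow_) objects to reclaim
};

class GcQueue {
 public:
  explicit GcQueue(std::int64_t min_wait) : min_wait_(min_wait) {}

  // Deleting an object only records its tail objects; no space is freed yet.
  void enqueue(const std::string& tag, std::vector<std::string> oids, std::int64_t now) {
    entries_.push_back({tag, now + min_wait_, std::move(oids)});
  }

  // expired_only == true mimics `gc list` without --include-all.
  std::vector<GcEntry> list(std::int64_t now, bool expired_only) const {
    std::vector<GcEntry> out;
    for (const auto& e : entries_) {
      if (!expired_only || e.expire_at <= now) out.push_back(e);
    }
    return out;
  }

 private:
  std::int64_t min_wait_;
  std::vector<GcEntry> entries_;
};
```

With a 7200-second wait, an entry queued at t=1000 is invisible to an "expired only" listing until t=8200, which matches why the demo above needs `--include-all` to see the fresh entry.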

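At this point the data is still present in the underlying pool. Let's trigger the GC process manually and see what happens:

```
radosgw-admin gc process --include-all
```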

```
# rados -p expondata.rgw.buckets.data ls | grep mgr
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_14
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_6
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_12
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_2
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_4
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_3
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_8
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_9
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_5
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_0
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_1
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_11
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_10
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_15
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_13
b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_7
# radosgw-admin gc process --include-all
2024-03-03 01:44:18.454 7fe7a062bb00  0 svc_http_manager.cc:52:do_start:start http managers
2024-03-03 01:44:19.450 7fe7a062bb00  0 svc_http_manager.cc:39:shutdown:shutdown http manager
2024-03-03 01:44:19.466 7fe7a062bb00  0 svc_http_manager.cc:39:shutdown:shutdown http manager
# date; rados -p expondata.rgw.buckets.data ls | grep mgr
Sun Mar  3 01:44:21 EST 2024
2024-03-03 01:44:21.861 7f6dbaadcd00 -1 ceph_context.cc:385:handle_conf_change:WARNING: all dangerous and experimental features are enabled.
2024-03-03 01:44:21.908 7f6dbaadcd00 -1 ceph_context.cc:385:handle_conf_change:WARNING: all dangerous and experimental features are enabled.
2024-03-03 01:44:21.943 7f6dbaadcd00 -1 ceph_context.cc:385:handle_conf_change:WARNING: all dangerous and experimental features are enabled.
# the GC queue is empty as well
# date; radosgw-admin gc list --include-all
Sun Mar  3 01:44:42 EST 2024
2024-03-03 01:44:42.491 7f30a3fdfb00  0 svc_http_manager.cc:52:do_start:start http managers
2024-03-03 01:44:42.724 7f30a3fdfb00  0 svc_http_manager.cc:39:shutdown:shutdown http manager
2024-03-03 01:44:42.739 7f30a3fdfb00  0 svc_http_manager.cc:39:shutdown:shutdown http manager
```

After the GC pass, `rados ls` no longer returns any `mgr` shadow objects (only the warnings are printed), and the GC queue is empty.

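When does GC come into play? In RGW, GC generally refers to asynchronous reclamation of disk space. Typical cases:

- A client deletes an object: the disk space the object occupies is handed over to background GC.
- A client overwrites an object: the space used by the old object has to be released.
- A client uploads an object (multipart upload): the upload produces shadow objects, and this temporary data is also reclaimed by GC.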

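How is the GC module initialized? It is initialized when RGW starts. At startup it sets up `rgw_gc_max_objs` objects in the `gc` namespace of the log pool (32 by default, at most 65521). These gc objects store the metadata of the data waiting to be reclaimed (as shown above).

The objects are named `gc.<num>`.

Entries waiting to be reclaimed are hashed across the gc objects to improve performance. (The same sharding idea is used to optimize bucket listing; it will be covered in depth when bucket sharding is discussed later.)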
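The sharding described above can be sketched as follows. This is a minimal illustration, assuming a tag is mapped to a shard by hashing it modulo the shard count: `std::hash` stands in for RGW's own string hash, and `effective_gc_shards`, `gc_shard_name`, and `gc_shard_for_tag` are hypothetical helpers. The lower clamp to one shard is an added guard against a modulo by zero, not something taken from the source.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>

// Upper bound on rgw_gc_max_objs mentioned in the text.
constexpr int kMaxGcShards = 65521;

// Clamp the configured shard count into [1, kMaxGcShards].
int effective_gc_shards(int configured) {
  return std::max(1, std::min(configured, kMaxGcShards));
}

// Name of the RADOS object that backs a shard: gc.<num>.
std::string gc_shard_name(int index) {
  return "gc." + std::to_string(index);
}

// Map a tag to a shard index. std::hash is a stand-in for RGW's hash function.
int gc_shard_for_tag(const std::string& tag, int num_shards) {
  return static_cast<int>(std::hash<std::string>{}(tag) %
                          static_cast<std::size_t>(num_shards));
}
```

Because the mapping is deterministic, every request ID always lands on the same gc object, while different requests spread across all shards and can be processed independently.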

```
# ceph daemon out/radosgw.8000.asok config show | grep rgw_gc_max_objs
    "rgw_gc_max_objs": "1",
# rados ls -p expondata.rgw.log -N gc
gc.0
```

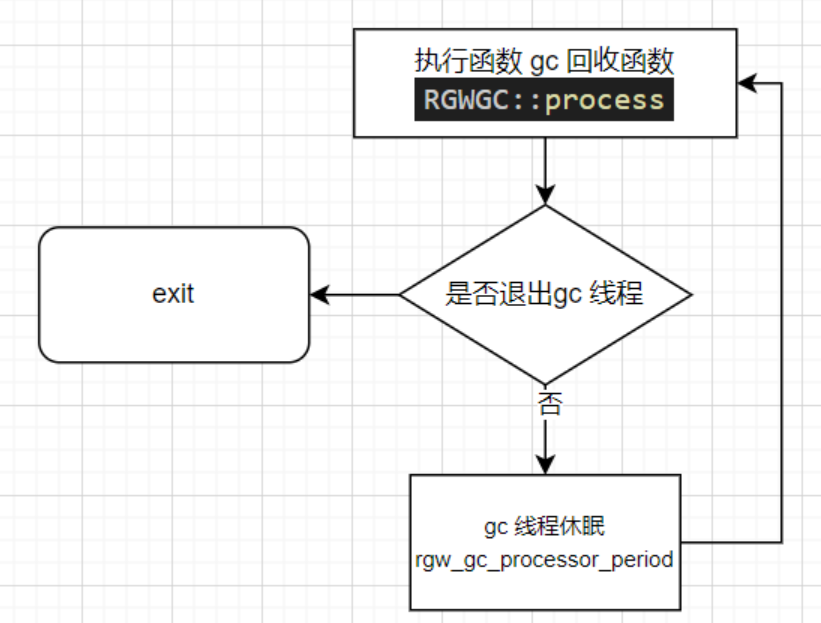

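After the gc objects are set up, a thread is started. Its startup is the same as for other components and is not repeated here; the focus is the GC thread's entry function. The `rgw_gc_processor_period` parameter determines how often the GC function runs (default 3600 seconds, i.e. 1 hour). Between runs the thread sleeps using a mutex plus a condition variable.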
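The sleep-and-wake loop described above can be sketched with `std::mutex` and `std::condition_variable`. This is a simplified model, not RGW's actual worker class: `GcWorker`, `pass_`, and `down_` are hypothetical names, and one call to `pass_` stands in for one run of `RGWGC::process`. If a pass takes longer than the period, the next one starts immediately; otherwise the thread sleeps for the remainder of the period.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <thread>

// Simplified periodic GC worker (hypothetical; mirrors the pattern only).
class GcWorker {
 public:
  GcWorker(std::chrono::milliseconds period, std::function<void()> pass)
      : period_(period), pass_(std::move(pass)) {}
  ~GcWorker() { stop(); }

  void start() { thread_ = std::thread([this] { entry(); }); }

  // Set the shutdown flag under the lock, wake the sleeper, wait for it to exit.
  void stop() {
    {
      std::lock_guard<std::mutex> lk(mu_);
      down_ = true;
    }
    cv_.notify_all();
    if (thread_.joinable()) thread_.join();
  }

 private:
  void entry() {
    do {
      auto start = std::chrono::steady_clock::now();
      pass_();  // one GC pass
      auto elapsed = std::chrono::steady_clock::now() - start;
      if (elapsed >= period_) continue;  // pass overran the period: go again
      std::unique_lock<std::mutex> lk(mu_);
      // Sleep for the rest of the period; return early if stop() is called.
      cv_.wait_for(lk, period_ - elapsed, [this] { return down_; });
    } while (!is_down());
  }

  bool is_down() {
    std::lock_guard<std::mutex> lk(mu_);
    return down_;
  }

  std::chrono::milliseconds period_;
  std::function<void()> pass_;
  std::mutex mu_;
  std::condition_variable cv_;
  bool down_ = false;
  std::thread thread_;
};
```

The predicate passed to `wait_for` guards against spurious wakeups, and because `stop()` sets the flag under the same mutex before notifying, a shutdown request cannot slip in unnoticed between the check and the wait.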

What does the GC function do? `RGWGC::process` bounds how long a GC pass may run; the limit is set with the `rgw_gc_processor_max_time` parameter.

`RGWGC::process`:

```cpp
int RGWGC::process(bool expired_only)
{
  int max_secs = cct->_conf->rgw_gc_processor_max_time;

  // start from a random shard instead of always from gc.0
  const int start = ceph::util::generate_random_number(0, max_objs - 1);

  RGWGCIOManager io_manager(this, store->ctx(), this);

  for (int i = 0; i < max_objs; i++) {
    int index = (i + start) % max_objs;
    int ret = process(index, max_secs, expired_only, io_manager);
    if (ret < 0)
      return ret;
  }
  if (!going_down()) {
    io_manager.drain();  // wait for outstanding IOs
  }

  return 0;
}
```
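The shard rotation in the loop above can be isolated into a small sketch. `shard_visit_order` and `random_start` are hypothetical helpers, and `std::mt19937` stands in for `ceph::util::generate_random_number`. Starting each pass at a random shard presumably keeps several RGW instances from all beginning at `gc.0` and contending for the same shard at the same moment.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Visit every shard exactly once, beginning at `start`: (i + start) % max_objs.
std::vector<int> shard_visit_order(int max_objs, int start) {
  std::vector<int> order;
  order.reserve(max_objs);
  for (int i = 0; i < max_objs; i++) {
    order.push_back((i + start) % max_objs);
  }
  return order;
}

// Pick a uniformly random starting shard in [0, max_objs - 1].
int random_start(int max_objs, std::mt19937& rng) {
  std::uniform_int_distribution<int> dist(0, max_objs - 1);
  return dist(rng);
}
```

For example, with five shards and a start of 3, the visit order is 3, 4, 0, 1, 2.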

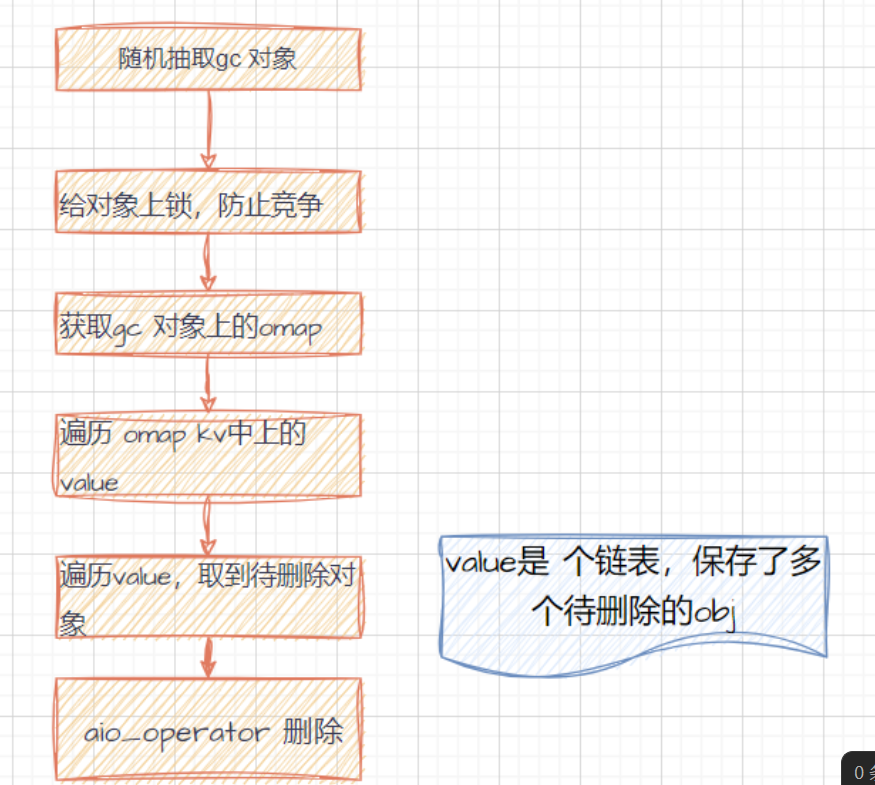

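How does GC delete data? Let's look at the overall flow first.

First, GC reads the omap of a gc object through the cls interface provided by Ceph. Each value holds a list of objects to reclaim (don't worry yet about what the tag is). One element of that list looks like this: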

```
{
    "pool": "expondata.rgw.buckets.data",
    "oid": "b4c4f8c1-3b5d-4cc7-ac6a-a00071fc02be.14113.1__shadow_mgr.x.log.82F25-CknxUPgV0I9JMfLqw054nSz8u_15",
    "key": "",
    "instance": ""
}
```

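From the pool and object name in each element, GC builds an op:

```cpp
int RGWGC::process(int index, int max_secs, bool expired_only,
                   RGWGCIOManager& io_manager)
{
  ...
      ctx->locator_set_key(obj.loc);
      const string& oid = obj.key.name; /* the raw oid is stored here */

      ObjectWriteOperation op;
      // drop the reference held under this tag; the object is removed
      // once no references remain
      cls_refcount_put(op, info.tag, true);

      ret = io_manager.schedule_io(ctx, oid, &op, index, info.tag);
  ...
}
```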
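In the Ceph source this step issues a reference-count "put" rather than an unconditional remove, so a tail object disappears only when its last reference is released. The toy model below illustrates why that matters when two objects share one tail; treating a server-side copy as the second reference holder is an assumption for illustration. `TailStore` and its methods are hypothetical and say nothing about how cls_refcount stores references on disk.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>

// Toy model of reference-counted tail objects (hypothetical, not the cls API).
class TailStore {
 public:
  // Create a tail object referenced by the tag of the request that wrote it.
  void create(const std::string& oid, const std::string& tag) {
    refs_[oid] = std::set<std::string>{tag};
  }

  // Another object (e.g. a copy) starts sharing this tail under its own tag.
  void get(const std::string& oid, const std::string& tag) { refs_.at(oid).insert(tag); }

  // Drop one reference; the object is removed only when none remain.
  // Returns true if this call actually removed the object.
  bool put(const std::string& oid, const std::string& tag) {
    auto it = refs_.find(oid);
    if (it == refs_.end()) return false;  // already gone (think -ENOENT)
    it->second.erase(tag);
    if (!it->second.empty()) return false;
    refs_.erase(it);
    return true;
  }

  bool exists(const std::string& oid) const { return refs_.count(oid) != 0; }

 private:
  std::map<std::string, std::set<std::string>> refs_;
};
```

Dropping the first reference leaves the shared tail intact; only the second drop actually frees it.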

`schedule_io`:

```cpp
int schedule_io(IoCtx *ioctx, const string& oid, ObjectWriteOperation *op,
                int index, const string& tag) {
  // too many IOs in flight: wait for the oldest ones first
  while (ios.size() > max_aio) {
    if (gc->going_down()) {
      return 0;
    }
    handle_next_completion();
  }

  AioCompletion *c = librados::Rados::aio_create_completion(NULL, NULL, NULL);
  int ret = ioctx->aio_operate(oid, c, op);
  if (ret < 0) {
    return ret;
  }
  ios.push_back(IO{IO::TailIO, c, oid, index, tag});

  return 0;
}
```
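The throttling in `schedule_io`, together with the error handling in `handle_next_completion`, can be modeled without librados. In this sketch, `IoWindow` is a hypothetical class and a `std::function<int()>` stands in for an AIO completion: calling it "waits" for the op and yields its return code. Like the code above, it drains while `size() > max_aio`, so at most `max_aio + 1` ops are ever pending, and `-ENOENT` (object already gone) counts as success.

```cpp
#include <algorithm>
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <deque>
#include <functional>
#include <string>

// Hypothetical bounded window of asynchronous ops.
class IoWindow {
 public:
  explicit IoWindow(std::size_t max_aio) : max_aio_(max_aio) {}

  // Before queuing a new op, complete the oldest ones while the window is full.
  void schedule(const std::string& oid, std::function<int()> op) {
    while (ios_.size() > max_aio_) handle_next_completion();
    ios_.push_back({oid, std::move(op)});
    peak_ = std::max(peak_, ios_.size());
  }

  void drain() {
    while (!ios_.empty()) handle_next_completion();
  }

  std::size_t peak() const { return peak_; }
  int failures() const { return failures_; }

 private:
  struct Io {
    std::string oid;
    std::function<int()> op;
  };

  void handle_next_completion() {
    int ret = ios_.front().op();
    if (ret == -ENOENT) ret = 0;  // already deleted: treat as success
    if (ret < 0) ++failures_;
    ios_.pop_front();
  }

  std::size_t max_aio_;
  std::deque<Io> ios_;
  std::size_t peak_ = 0;
  int failures_ = 0;
};
```

Completing in FIFO order keeps the bookkeeping simple: the oldest op is the one most likely to have finished already.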

`handle_next_completion`:

```cpp
void handle_next_completion() {
  ceph_assert(!ios.empty());
  IO& io = ios.front();
  io.c->wait_for_complete();
  int ret = io.c->get_return_value();
  io.c->release();

  if (ret == -ENOENT) {
    ret = 0;  // the object is already gone
  }

  if (io.type == IO::IndexIO) {
    if (ret < 0) {
      ldout(cct, 0) << "WARNING: gc cleanup of tags on gc shard index=" <<
        io.index << " returned error, ret=" << ret << dendl;
    }
    goto done;
  }

  if (ret < 0) {
    ldout(cct, 0) << "WARNING: gc could not remove oid=" << io.oid <<
      ", ret=" << ret << dendl;
    goto done;
  }

  schedule_tag_removal(io.index, io.tag);

done:
  ios.pop_front();
}
```

Once the data has been deleted, how are the omap entries removed?

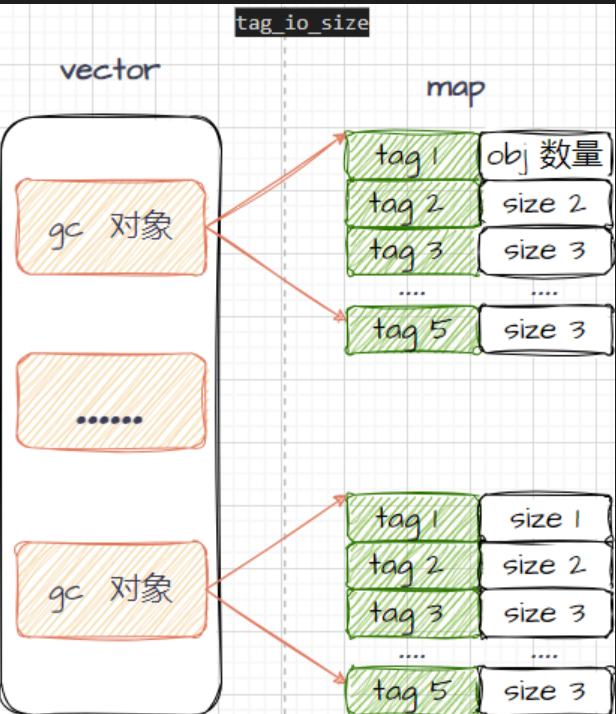

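While iterating over the values, GC records each omap key (the tag) in `vector<map<string, size_t>> tag_io_size`: one map per gc shard, mapping a tag to the number of its tail objects that are still being deleted.

```cpp
io_manager.add_tag_io_size(index, info.tag, chain.objs.size());

void add_tag_io_size(int index, string tag, size_t size) {
  auto& ts = tag_io_size[index];
  ts[tag] += size;
}
```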

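What is this bookkeeping for?

When `handle_next_completion` processes the completion of a GC op, it finally calls `schedule_tag_removal` to update `tag_io_size`. The tag's counter is decremented; once every object under the tag has been handled, the tag is queued for removal, and when the queue reaches `rgw_gc_max_trim_chunk` entries it is flushed:

```cpp
void schedule_tag_removal(int index, string tag) {
  auto& ts = tag_io_size[index];
  auto ts_it = ts.find(tag);
  if (ts_it != ts.end()) {
    auto& size = ts_it->second;
    --size;
    // wait until all shadow objects of this tag are deleted
    if (size != 0)
      return;

    ts.erase(ts_it);
  }

  auto& rt = remove_tags[index];

  rt.push_back(tag);
  if (rt.size() >= (size_t)cct->_conf->rgw_gc_max_trim_chunk) {
    flush_remove_tags(index, rt);
  }
}
```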

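`flush_remove_tags`:

```cpp
void flush_remove_tags(int index, vector<string>& rt) {
  IO index_io;
  index_io.type = IO::IndexIO;
  index_io.index = index;

  // clear the batch on every exit path, even if the removal fails
  auto rt_guard = make_scope_guard(
    [&]
      {
        rt.clear();
      }
    );

  // remove all batched omap keys from gc shard `index` with one async op
  int ret = gc->remove(index, rt, &index_io.c);
  if (ret < 0) {
    ldout(cct, 0) << "WARNING: failed to remove tags on gc shard index=" <<
      index << " ret=" << ret << dendl;
    return;
  }
  ios.push_back(index_io);
}
```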
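The tag bookkeeping above (`add_tag_io_size`, `schedule_tag_removal`, `flush_remove_tags`) can be condensed into a single-shard toy model. `TagTrimmer` is a hypothetical class, and recording each flushed batch in `batches_` stands in for the one asynchronous omap-removal op RGW would send. The explicit `flush()` at the end models draining leftovers, which is assumed here to happen when a GC pass finishes.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Toy, single-shard version of the tag bookkeeping (hypothetical names).
class TagTrimmer {
 public:
  explicit TagTrimmer(std::size_t max_trim_chunk) : chunk_(max_trim_chunk) {}

  // Called once per gc entry: how many tail objects are being deleted for `tag`.
  void add_tag_io_size(const std::string& tag, std::size_t n) { pending_[tag] += n; }

  // Called after each tail-object deletion completes.
  void schedule_tag_removal(const std::string& tag) {
    auto it = pending_.find(tag);
    if (it != pending_.end()) {
      if (--it->second != 0) return;  // other objects of this tag still in flight
      pending_.erase(it);
    }
    to_remove_.push_back(tag);
    if (to_remove_.size() >= chunk_) flush();
  }

  // One batched removal instead of one IO per tag.
  void flush() {
    if (to_remove_.empty()) return;
    batches_.push_back(to_remove_);
    to_remove_.clear();
  }

  const std::vector<std::vector<std::string>>& batches() const { return batches_; }

 private:
  std::size_t chunk_;
  std::map<std::string, std::size_t> pending_;
  std::vector<std::string> to_remove_;
  std::vector<std::vector<std::string>> batches_;
};
```

A tag is only eligible for removal after all of its tail objects are gone, and removals are grouped, so a shard with many small entries costs a handful of omap writes rather than one per entry.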

How are the entries in `gc list` (the omap on the gc objects) produced?

Take object deletion as an example:

```cpp
int RGWRados::Object::Delete::delete_obj()
{
  ...
  int ret = target->complete_atomic_modification();
  ...
}
```

`complete_atomic_modification`:

```cpp
int RGWRados::Object::complete_atomic_modification()
{
  if (!state->has_manifest || state->keep_tail)
    return 0;

  cls_rgw_obj_chain chain;
  store->update_gc_chain(obj, state->manifest, &chain);

  if (chain.empty()) {
    return 0;
  }

  // prefer the tail tag; fall back to the object tag
  string tag = (state->tail_tag.length() > 0 ? state->tail_tag.to_str() : state->obj_tag.to_str());
  return store->gc->send_chain(chain, tag, false);  // false: asynchronous
}
```
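The decision made in `complete_atomic_modification` can be restated as a pure function. This is a sketch under simplifying assumptions: `ObjState` and `GcChainRequest` are hypothetical stand-ins for RGW's object state and the chain passed to `send_chain`, and `tail_oids` is assumed to already hold the tail objects that would go into the chain.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <vector>

// Hypothetical stand-in for the object state consulted on delete.
struct ObjState {
  bool has_manifest = false;  // object has tail objects described by a manifest
  bool keep_tail = false;     // tail must be preserved, so nothing goes to GC
  std::string tail_tag;       // may be empty
  std::string obj_tag;        // fallback tag
  std::vector<std::string> tail_oids;
};

// What would be handed to the GC queue.
struct GcChainRequest {
  std::string tag;
  std::vector<std::string> oids;
};

// Returns the chain to enqueue, or nothing if there is no garbage to hand off.
std::optional<GcChainRequest> gc_chain_for_delete(const ObjState& s) {
  if (!s.has_manifest || s.keep_tail) return std::nullopt;
  if (s.tail_oids.empty()) return std::nullopt;
  const std::string& tag = !s.tail_tag.empty() ? s.tail_tag : s.obj_tag;
  return GcChainRequest{tag, s.tail_oids};
}
```

The tag chosen here becomes the omap key on the gc shard, which is why the next question is where that tag comes from.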

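Where does this tag come from? Tracing the code from the beginning shows that the tag is the request ID (`s->req_id`, built from `req->id`). Using it as the key of the omap entries on the gc objects is presumably meant to guarantee uniqueness.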

`req->id`

In `process_request`, a request ID looks like this:

```
int process_request(...)
...
// request id, for example:
// 2d94c7b3-a7d2-4365-b325-6e617d2f267e.4236.3
```

When the object processor class is constructed, `unique_tag = s->req_id`:

```cpp
AtomicObjectProcessor(Aio *aio, RGWRados *store,
                      const RGWBucketInfo& bucket_info,
                      const rgw_placement_rule *ptail_placement_rule,
                      const rgw_user& owner,
                      const rgw_obj& head_obj,
                      std::optional<uint64_t> olh_epoch,
                      const std::string& unique_tag)
```

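After the shadow objects are written, `obj_op.meta.ptag` is set to `unique_tag` when the metadata is built:

```cpp
int AtomicObjectProcessor::complete(...)
{
  ...
  obj_op.meta.ptag = &unique_tag; /* use req_id as operation tag */
  ...
}
```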

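`state->write_tag`:

```cpp
int RGWRados::Object::prepare_atomic_modification(...)
{
  ...
  if (ptag) {
    state->write_tag = *ptag;  // ptag points at unique_tag (s->req_id)
  } else {
    append_rand_alpha(store->ctx(), state->write_tag, state->write_tag, 32);
  }
  bufferlist bl;
  bl.append(state->write_tag.c_str(), state->write_tag.size() + 1);

  op.setxattr(RGW_ATTR_ID_TAG, bl);
  if (modify_tail) {
    op.setxattr(RGW_ATTR_TAIL_TAG, bl);  // the same tag becomes the tail tag
  }
  ...
}
```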

So `tail_tag = s->req_id`.

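The overall GC flow is not complicated and the design is clear, but it contains many details and the code has a lot to learn from. For example, omap entries are not removed with one IO per entry; removals are accumulated and sent in batches to reduce overhead. There is also the sharding idea: multiple shards increase concurrency. Many cls operations are involved as well; they are not covered here and will get a chapter of their own.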

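As for GC tuning: if the workload is sensitive to storage space, you can adjust how often GC runs and how long each run lasts (`rgw_gc_processor_period` and `rgw_gc_processor_max_time`), but such changes should be based on the actual workload pressure.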

References:

- https://www.yisu.com/zixun/554091.html
- https://bean-li.github.io/multisite-put-obj/
